I attended the PASS (Professional Association for SQL Server) Summit in early November. I attended two pre-conference sessions, on Monday and Tuesday.

Monday: SQL Server Security by Denny Cherry

Tuesday: Fixing Query Performance Problems from Estimates, Statistics, Heuristics, and Cardinality by Kimberly Tripp

This time I also participated in the Speaker Idol competition.

I attended the regular sessions on Wednesday, Thursday and Friday.

Here is a brief summary of what I learned.

Monday 11-05-2018 (pre-conference)

SQL Server Security

Speaker: Denny Cherry

SQL Server security does not begin with SQL Server itself. It begins at your organization’s infrastructure.

Network Design:

This depends on what a company wants to use: public or private IP addresses. Private IPs became more important when cloud infrastructure came into the picture. In the past, hackers used to exploit public IP\DNS names and broadcast messages, but this problem is mostly gone now that additional protection has been put in place.

Private IPs: 192.168.0.0 is typically used for home networks; 172.16.0.0 and 10.0.0.0 are typically used by business networks.

Public IPs: Any IP address that is not in a private IP range is a public IP.

VLANs: Virtual Local Area Networks. These are great for segmenting the SQL Server network, and they save money on switches. A downside is that things need to be configured correctly the first time. A good strategy is to block all ports on web servers and leave only 1433 open for talking to SQL Server.

ACLs (access control lists) work in tandem with firewalls. An ACL applies to new access, not to existing access. If there is a lot of access to configure, it is better to use the firewall to block everything and poke holes (Swiss cheese) to let the required traffic through.

Switches have ports which are connected to email servers, database servers and web servers. On top of the firewall is a NAT (network address translation) layer that allows your machines to talk to machines outside of your network.
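The private/public split above can be checked mechanically. Below is a minimal Python sketch, assuming the three private blocks are the standard RFC 1918 ranges (the /8, /12 and /16 prefix lengths come from that RFC, not from the session). The function name is made up for illustration, and "public" here simply means "not in one of these three blocks".

```python
import ipaddress

# The three private ranges named in the notes, in CIDR form (RFC 1918).
PRIVATE_NETWORKS = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("172.16.0.0/12"),
    ipaddress.ip_network("192.168.0.0/16"),
]


def is_private_ipv4(address: str) -> bool:
    """Return True if the address falls inside one of the private ranges."""
    ip = ipaddress.ip_address(address)
    return any(ip in network for network in PRIVATE_NETWORKS)


if __name__ == "__main__":
    for candidate in ["192.168.1.10", "172.20.5.4", "10.1.2.3", "8.8.8.8"]:
        label = "private" if is_private_ipv4(candidate) else "public"
        print(candidate, label)
```

Note that 172.16.0.0 covers only 172.16.x.x through 172.31.x.x, so an address such as 172.32.0.1 is already public.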

There are 3 types of network design. Each has its own benefits and shortcomings.

- Everything behind the router

- Web servers in front of the router

- Web server in DMZ (VLAN)

Each server should be configured to block all access except required services. Inbound traffic is blocked by the firewall by default. As a best practice, also block outbound traffic to public IPs. It does not matter what port number you use for SQL Server: the web server's web config holds that information, so a hacker scanning it will find it. Only if your web config is encrypted is it truly protected from a hacker.

Accessing SQL Server via the internet is not recommended. There are lots of VPN options available. Desktop sharing apps should be regularly monitored because an unknowing employee can leave a back door open after one of the sessions. Your first layer of security is a VPN.

Physical Server Security:

How are people allowed into the data center located at your business? Does the cleaning crew have access? Is there dual authentication to enter, e.g., PIN + biometric? Does an alarm sound if it is accessed outside of defined and approved times?

Having a screensaver with the company logo on company computers (enforced by group policy) is a good practice.

Social engineering is the easiest way to gain access to data. You need to train the people who have access to data.

Cloud security is as secure as you want it to be. Inherently, the cloud is not more or less secure than on-premises environments. Some companies require firewalls from 2 different companies, so that if one fails the other kicks in. Some companies require that logins to the cloud only work from the office. You can bypass default Azure routing and define your own. Microsoft or any other vendor (AWS, Google) cannot see your data in the cloud. Data is encrypted at the storage level. An Active Directory account is required to access your data. Microsoft especially is taking data security very seriously. The engineers who designed the system are not allowed in the data centers, where they could identify which servers hold which company’s data.

With increased security come challenges. For example, California is implementing a GDPR-like regulation in 2019. Everyone has the right to be forgotten after a website visit. It is a double-edged sword: a forgotten customer can no longer pay their bills online.

Data Encryption Options:

- Application Encryption: This could be .NET encryption or Always Encrypted (AE). AE is the best; data is protected all the way. It was introduced in SQL Server 2016 and needs only a small change in the application connection string: “Column Encryption Setting=enabled”.

- SSL Encryption: SQL Server offers SSL protection for data in transit. No client change is needed. It is configured via SQL Server Configuration Manager. Availability groups can use different certificates on each replica.

- Database Encryption: This is commonly known as column-level encryption. This type does not require application code changes. All encryption workload is centralized on the database server. It is a great solution for protecting PII data.

- Storage Encryption: TDE (transparent data encryption) encrypts data at rest (at disk level). Data within the table is still plaintext. Backup files are protected and cannot be restored on another server without the proper encryption key. TDE disables instant file initialization. TDE will not encrypt FILESTREAM data; to protect FILESTREAM, use BitLocker.

Data theft is not a matter of if, it’s a matter of when. Check haveibeenpwned.com. The biggest concern for the company is its reputation.

Data Classification is based on your business and based on what data is stored.

- General Data

- Low Business Value data

- Medium Business Value data

- High Business Value data

- PII

Business users have to identify the data classification. The DBA should not be driving it; the DBA helps the business accomplish it. SSMS 17.5 has data classification available. It only looks at column names and does not comb through the data.

How can you protect the data if someone takes a picture or screenshot of PII data? None of the encryption methods we discussed so far will work in this case.

Protecting Passwords:

.NET apps can encrypt the password in the config file the first time the app connects to the DB.

Domain policies are guaranteed to protect Windows accounts. There is no guarantee domain policies will protect SQL accounts.

SQL CLR runs in a separate memory space from the SQL Server binaries. MS supports running it in SAFE or external access mode. An unsafe assembly can call any managed code. CLR may have security issues; therefore, this feature is gone from Azure. Text manipulation works better in CLR than in SQL.

To protect TDE keys, keep a few copies, preferably on-prem in a central location where they are easy to find. Put another copy on a USB drive and give it to HR to keep in a fire-proof safe. Never store the keys with the backups.

Anti-Virus:

SQL Server should not need anti-virus software running on the server. Anti-virus products can cause CPU, IO and RAM issues, and their behavior may change over time, which affects SQL Server performance. To check if anti-virus is installed, run SELECT * FROM sys.dm_os_loaded_modules.

Tuesday, 11-06-2018 (pre-conference):

Fixing Query Performance Problems from Estimates, Statistics, Heuristics, and Cardinality

Speaker: Kimberly Tripp (of SQLSkills.com)

The first step is to find out whether the query really has a problem. There are many tools out there to do that. This session is about what to do after that. The next step is to identify whether it is an out-of-date statistics issue on the column or a plan caching issue. This is very important because the remedy depends on it.

In lots of instances, statistics are not the problem but get blamed anyway. Sometimes caching and parameterization are the problem.

Generalized and Simplified Query execution steps:

- Parsing (syntax checking)

- Standardization/algebrization (the optimizer looks at the predicate and pushes it down the tree to select the correct index, if one exists)

- Cost-based optimization (chooses a good plan fast, not the best plan)

- Compilation

- Execution

Cost-Based Optimization: More statistics on a table is not a bad thing. The optimizer’s job is not to find the best plan but to find a good plan fast. It sometimes rewrites your query to lower the cost. It estimates how much data it is going to process before processing it.

In the following example, the optimizer will use an index for the second query.

- Where monthly_salary * 12 = Value (maybe written in haste in an ad hoc query; higher cost)

- Where monthly_salary = Value / 12 (uses index)
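The effect of moving the arithmetic off the column can be tried outside SQL Server. The sketch below uses Python's built-in sqlite3 module purely as a stand-in: SQLite's planner is not SQL Server's optimizer, and the table, index name and data are invented for illustration. The principle it shows is the same, though: an index on a column can be searched only when the column stands alone on one side of the comparison.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE employee (id INTEGER PRIMARY KEY, name TEXT, monthly_salary INTEGER)"
)
conn.execute("CREATE INDEX ix_employee_salary ON employee (monthly_salary)")
conn.executemany(
    "INSERT INTO employee (name, monthly_salary) VALUES (?, ?)",
    [(f"emp{i}", 3000 + (i % 50) * 100) for i in range(1000)],
)


def plan(sql: str) -> str:
    """Return the planner's description of how it will read the table."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql).fetchall()
    # The human-readable detail is the last column of each plan row.
    return " | ".join(row[-1] for row in rows)


# Arithmetic on the column: the index on monthly_salary cannot be searched.
non_sargable = plan("SELECT name FROM employee WHERE monthly_salary * 12 = 60000")

# Arithmetic moved to the constant side: the column is compared directly.
sargable = plan("SELECT name FROM employee WHERE monthly_salary = 60000 / 12")

print("column * 12 = value ->", non_sargable)
print("column = value / 12 ->", sargable)
```

The first plan reports a scan of the table and the second a search using the index. The exact wording of the plan text varies between SQLite versions.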

How does plan caching cause problems? One way is when you test your procedure with certain parameters over and over and, after your final change, forget to clear the plan. The optimizer reuses the existing plan in the cache (which is faster than compiling a new one) for different parameters, and that results in a performance problem.

Solution: use EXECUTE procedure WITH RECOMPILE. That recompiles the whole procedure, not just the statements inside the proc that are having trouble. Instead, use OPTION (RECOMPILE) at the statement level, which is less harsh.

A single query can have more than 10 plans just because the ANSI settings (SET options) or spaces are different.

To find out the session settings, run this: SELECT * FROM sys.dm_exec_sessions WHERE is_user_process = 1

Selectivity: the plan also varies based on what percentage of the data a query returns. Lots of narrow non-clustered indexes are bad because they are non-selective. The tipping point between an index seek and a scan is when the number of rows returned is anywhere between 1/4 and 1/3 of the number of pages in the table.
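As a worked example of the tipping point, the Python sketch below reads the rule of thumb as "rows returned compared with the number of pages in the table", which is my interpretation of the note. The page count and function names are hypothetical, and the real optimizer decides on cost, so this is only back-of-the-envelope arithmetic.

```python
from typing import Tuple


def tipping_point_range(table_pages: int) -> Tuple[int, int]:
    """Row counts between which the plan is expected to tip from seek to scan."""
    return table_pages // 4, table_pages // 3


def likely_access(rows_returned: int, table_pages: int) -> str:
    """Rough guess at the access method under the 1/4 to 1/3 rule of thumb."""
    low, high = tipping_point_range(table_pages)
    if rows_returned < low:
        return "seek"
    if rows_returned > high:
        return "scan"
    return "could go either way"


if __name__ == "__main__":
    pages = 12000  # hypothetical table size in pages
    print(tipping_point_range(pages))
    print(likely_access(500, pages))
    print(likely_access(6000, pages))
```

For a 12,000-page table the range is 3,000 to 4,000 rows. If that table holds a million rows, the index stops being attractive once a query returns well under 1% of them.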

How to see the statistics?

- DBCC SHOW_STATISTICS

- sp_autostats tablename

Index density: If there are 10,000 rows and the index is on the last name column, say 400 rows are returned for a particular last name. But if the index is wider and contains the last name and first name combination, maybe 5 rows are returned. It is more selective.

The histogram has up to 201 steps (including 1 for NULLs).

When were the statistics last updated?

Use STATS_DATE(object_id, index_id) in a query.

Demo: use the Credit database and Kimberly’s script to see the usage of statistics. If you see that a statistic has been used a lot, then it may be a good idea to create an index on that column.

Update statistics with a full scan in off hours if possible. Sometimes updating with SAMPLE creates more problems for the query. Sometimes a full update is not possible on a very large table; in that case, use filtered statistics. Never turn off auto update stats, but do run your own update statistics job regularly in a maintenance window. Auto update is there as a safety net if your job fails for some reason.
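The numbers in the density example can be worked through in a few lines of Python. This sketch assumes density is defined as 1 divided by the number of distinct key values, and that values are evenly distributed. The distinct-value counts (25 and 2,000) are derived from the 400-row and 5-row figures above, not taken from the session.

```python
def density(distinct_values: int) -> float:
    """Density of an index key: 1 / number of distinct values."""
    return 1.0 / distinct_values


def average_rows_per_value(total_rows: int, distinct_values: int) -> float:
    """Rows expected for one key value, assuming an even distribution."""
    return total_rows / distinct_values


TOTAL_ROWS = 10_000
DISTINCT_LAST_NAMES = 25       # 10,000 rows / 400 rows per last name
DISTINCT_FULL_NAMES = 2_000    # 10,000 rows / 5 rows per (last, first) pair

if __name__ == "__main__":
    for label, distinct in [
        ("last name", DISTINCT_LAST_NAMES),
        ("last name + first name", DISTINCT_FULL_NAMES),
    ]:
        print(label, density(distinct), average_rows_per_value(TOTAL_ROWS, distinct))
```

A lower density means a more selective key: 0.04 for last name alone against 0.0005 for the two-column key.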

An index rebuild will update statistics and will invalidate plans in the cache. So be careful when you do it.

If you use a filtered index, use OPTION (RECOMPILE) so that the index gets used. You have to manage its statistics updates yourself; it cannot depend on auto stats update.

Wednesday, 11-07-2018 sessions:

Session 2: Getting Better Query Plans by Improving SQL’s Estimates

Speaker: Brent Ozar

Abstract: You’ve been writing T-SQL queries for a few years now, and when you have performance issues, you’ve been updating stats and using OPTION (RECOMPILE). It’s served you well, but every now and then, you hit a problem you can’t solve. Your data’s been growing larger, your queries are taking longer to run, and you’re starting to wonder: how can I start getting better query plans? The secret is often comparing the query plan’s estimated number of rows to the actual number of rows. If they’re different, it’s up to you – not the SQL Server engine – to figure out why the guesses are wrong. To improve ’em, you can change your T-SQL, the way the data’s structured and stored, or how SQL Server thinks about the data. This session won’t fix every query – but it’ll give you a starting point to understand what you’re looking at, and where to go next as you learn about the Cardinality Estimator.

Prerequisites: You’re comfortable writing queries to get the data you want, but once you get a bad query plan, your only fix is OPTION RECOMPILE.

Error: spilling to disk. The estimates are wrong, maybe by more than 10X.

MAXDOP = 1 option in the query for a single-threaded demo.

SQL 2019 (latest)

StackOverflow2013 DB. Open source scripts.

Go to the old compatibility mode. Get an estimated plan.

Statistics updates help correct the estimation.

Changing the compatibility level to SQL 2017 still did not solve the estimation error. It has the new cardinality estimator (CE).

Changing to the SQL 2019 compatibility level resolves the spill problem for the same query. The estimate is still wrong, but the memory grant is greater because it adds a batch mode operator.

How do you resolve it in 2017? Create statistics. That did not resolve it, because the statistics were created on a sample, not a full scan. Do full scan statistics: the exec plan is still the same, so clear the plan cache. Finally, that removes the spill error and gives a correct estimate. But the query is still slow.

If you have a choice between creating a statistic or an index on a column, create the index instead; it will save you a lot of work. Plus it will give you a sorted list.

Stream aggregate does the computation faster in buckets.

Read: Thomas Kejser, Ascending key problem

Thursday, 11-08-2018 sessions:

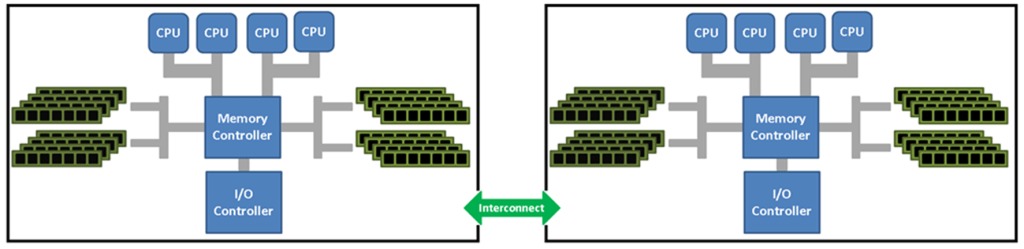

Session 1: Containers, Pods, and Databases- The Future of Infrastructure

Speaker: Joseph D’Antoni

Notes:

Containers have quietly been taking over the world of infrastructure, especially amongst developers and CI/CD practitioners. However, in the database space, container adoption has been lower.

SQL Server 2017 introduced the concept of deploying databases into Docker containers. In this session, you will learn the fundamentals of creating containers, learning about Kubernetes for management, and how to further your learning in this new and emerging space.

Kubernetes is still young but robust. MS and Google are contributing. It is a v1.0 product, not beta. Code will have to be stateful so it can keep track of which node it ran on. Kubernetes pods can contain Spark + SQL Server. 90% of deployments will be on Linux.

A container is a standardized unit of software that includes everything needed to run it. Fast to deploy, self-contained, upgrades are easy, scalable and multi-tenant.

In SQL 2019, AG is also available in Kubernetes. Virtualization was not easily accepted in 2003 when it was new. Containers are in the same position now in 2018. But this is the future of infrastructure.

Containers:

They are isolated but share the OS and bins\libraries. A VM has its own full copy of an OS; VMs do not share the host OS. With containers you can get more density with less resource utilization. They are faster to deploy than VMs. Storage is persisted. They are easier to destroy than VMs. They are licensed just like VMs: either license each one separately, or license one host and install as many as you like.

Compute and data are separated. Hard to do it in RDBMS. You can scale them independently.

Demo: SQL Server 2019 and Docker

- docker pull (path)

- sudo docker run

This worked in SQL Operations Studio, which is not a replacement for SSMS.

Kubernetes:

Initially developed by Google. Runs containerized applications with HA. The name is a Greek word meaning a person who steers the ship. Frequently deployed in conjunction with Docker, using a container service and a Docker registry.

SQL Server and Kubernetes:

A Kubernetes cluster is made up of nodes. Each SQL Server and agent runs in its own pod and on its own node. There is a master node in the cluster; the master runs the cluster. Nodes are individual hosts, like VMs.

Session 3: Principle of Least Privilege: The Key to Strong Security

Speaker: Ed Leighton-Dick

Until now we have been putting more emphasis on network security so nobody can come inside and get our data. This is not enough anymore. 95% of data breaches happen through phishing. The next highest is cryptojacking. This is not going to stop. The threat is not only bad people outside but also people inside the company who are malicious, going to work for competitors, or just plain naïve. Until 2-3 years ago security was an afterthought. Now it is at the forefront. In 2017, there were about 1500 reported data breach incidents in the US.

GDPR assumes that you will build security and privacy into the product, not add them afterwards. This requires a huge investment.

Ed took 10 minutes to explain why it is important and then started on what we can do. He gave an example from his consulting experience of a security failure that happened because everyone had full rights.

Watch for application service accounts with higher permissions.

The ideal security goal is that every account is given the permissions needed to do its job and no more.
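One way to make this goal concrete is to compare what each account has been granted against what it actually needs, and flag the surplus. The Python sketch below does that with plain sets. The account names and permission lists are invented for illustration; in practice the "granted" side would come from the server's permission metadata and the "required" side from the analysis step.

```python
from typing import Dict, List, Set

# Hypothetical inventory: what each account needs to do its job...
REQUIRED: Dict[str, Set[str]] = {
    "app_orders": {"SELECT", "INSERT", "UPDATE"},
    "report_reader": {"SELECT"},
}

# ...and what it has actually been granted.
GRANTED: Dict[str, Set[str]] = {
    "app_orders": {"SELECT", "INSERT", "UPDATE", "DELETE", "ALTER"},
    "report_reader": {"SELECT"},
}


def excess_permissions(
    granted: Dict[str, Set[str]], required: Dict[str, Set[str]]
) -> Dict[str, List[str]]:
    """Return, per account, the permissions granted beyond what is required."""
    report: Dict[str, List[str]] = {}
    for account, perms in granted.items():
        extra = perms - required.get(account, set())
        if extra:
            report[account] = sorted(extra)
    return report


if __name__ == "__main__":
    print(excess_permissions(GRANTED, REQUIRED))
```

An account that is missing from the required list is reported with everything it holds, which errs on the side of flagging too much.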

Applying least privilege for applications:

- Require granular permissions; find out what the application does

Applying least privilege for developers:

- They should not have access to production.

- They should not have administrative rights.

- In non-prod environments they should not have access to prod data.

Applying least privilege for DBAs:

DBAs should audit their own activity, and ideally have both a least-privileged account and a high-privileged account.

Ideally, there should be a security officer, who is neither a DBA nor a developer, who manages the keys. Even the system administrator should not have access to data.

Step 1: Analyze

Find out what the vendor app does, what your app does, and what your regulations require you to do. Find out what needs to be done, not what is wanted, and what your users need to do. You may find that these do not align.

Do regular internal and external audits.

Step 2: Implementation

- SQL server 2014+ – custom server roles

- SQL server 2005 -2012 (separation of duties framework on CodePlex Archive)

[First demo of the session. Get the script from Ed’s blog.]

Use EXECUTE AS USER and then run sys.fn_my_permissions.

A db_owner on a DB can create a sysadmin if TRUSTWORTHY = 1 on that DB.

Step 3: Repeat

It is not a one-time process; you have to repeat it on a regular basis.

Friday, 11-09-2018 sessions:

Session 1: T-SQL Habits and Practices That Can Kill Performance

Speaker: Vern Rabe

- UNION vs UNION ALL

UNION makes the result unique by doing sorts, which is expensive. Run the query both ways to compare the execution plans.
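The difference in results is easy to see on a tiny data set. The sketch below uses Python's sqlite3 module as a stand-in for SQL Server, with made-up tables. It shows only the semantics (UNION removes duplicates, UNION ALL keeps every row), not the cost of the sort in a SQL Server plan.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE orders_2017 (customer TEXT);
    CREATE TABLE orders_2018 (customer TEXT);
    INSERT INTO orders_2017 VALUES ('alice'), ('bob'), ('bob');
    INSERT INTO orders_2018 VALUES ('bob'), ('carol');
    """
)

# UNION has to de-duplicate the combined rows before returning them.
union_rows = conn.execute(
    "SELECT customer FROM orders_2017 UNION SELECT customer FROM orders_2018"
).fetchall()

# UNION ALL simply appends one result to the other.
union_all_rows = conn.execute(
    "SELECT customer FROM orders_2017 UNION ALL SELECT customer FROM orders_2018"
).fetchall()

print("UNION     ->", len(union_rows), "rows")
print("UNION ALL ->", len(union_all_rows), "rows")
```

If you already know the inputs cannot overlap, or duplicates do not matter, UNION ALL gives the same answer without the de-duplication work.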

- Using superfluous ORDER BY

If a query only selects columns that are covered by the clustered index, then the list is already sorted. Run it without the ORDER BY and compare the results of both. You will find the ORDER BY was unnecessary.

- Not joining on keys

Usually, you want to join on keys. If you do not, you may get duplicates. Then you will use DISTINCT, which is a performance hit. The right way to do it is to use all the key (PK) columns in the join predicate.

- Having too many JOINs

The optimizer works out an execution plan and settles for a good-enough plan.

One way to solve optimizer timeouts on big joins is to break the query down into separate queries, store the results in temp tables, and join the temp tables.

- Over-using or mis-using views

Views hide the complexity of the join queries underneath. It starts with somebody writing a simple view, then somebody comes along and adds a few extra columns from another table. Make sure you know what is in the view before using a column from it in your query; maybe querying the base table directly will be cheaper. Do not reference a view in a view. Bad idea. Joining a view with a view increases complexity.

- User-defined function in a predicate

Example: MONTH(F.orderdate). MONTH is not user-defined but system-defined.

Create a UDF that wraps the built-in MONTH(F.orderdate) function.

Run both queries. Both plans look the same: both do a clustered index scan, but hovering over the row arrows shows different numbers, showing that the second one does a lot more work at the beginning and then filters rows out. Don’t rely on the execution percentages of the two queries for comparison.

- Ignoring the transaction logs

Inserts and updates touch the transaction log (tlog). Anything you can do to touch the disk less will improve performance. Example: insert into a temp table from a regular table and check the log using sys.fn_dblog, then run an update on the temp table and verify again using fn_dblog. Out of 200 rows only 5 need updating: the first query updates all rows, while the second query only updates the rows matching the criteria, and hence less is written to the tlog.
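The 200-versus-5 effect can be reproduced in miniature. The sketch below uses Python's sqlite3 module as a stand-in (SQLite has no fn_dblog, so it counts rows touched instead of log records), with an invented table in which only 5 of 200 rows actually need a new value.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE staging (id INTEGER PRIMARY KEY, status TEXT)")
conn.executemany(
    "INSERT INTO staging (id, status) VALUES (?, ?)",
    [(i, "old" if i <= 5 else "current") for i in range(1, 201)],
)
conn.commit()


def rows_touched(sql: str) -> int:
    """Run an UPDATE, report how many rows it modified, then undo it."""
    cursor = conn.execute(sql)
    touched = cursor.rowcount
    conn.rollback()  # restore the starting data for the next comparison
    return touched


# No filter: every row is rewritten, even the 195 that already hold the value.
update_all = rows_touched("UPDATE staging SET status = 'current'")

# Filtered: only the rows that really change are written.
update_changed = rows_touched(
    "UPDATE staging SET status = 'current' WHERE status <> 'current'"
)

print("unfiltered update touched", update_all, "rows")
print("filtered update touched", update_changed, "rows")
```

Both statements leave the table in the same final state. The filtered one just writes far fewer rows to get there, which on SQL Server means fewer log records.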

His stored procedure creates where clauses very easily. His script contains this stored proc. Using EXCEPT results in the same output.

- Ignoring data types

Mismatched data types in a predicate cause implicit or explicit conversions. He used a numbers table with 0.5 million rows. In one table the column is int and in the other table the column is char. Now compare sp_spaceused for both tables: the second table is bigger, and size affects query performance. The duration of the two queries differs, as you can see in the SELECT properties (Query time stats) in the execution plans.

- Not using OPTION (RECOMPILE)

It has special uses. The first time you execute an SP, the optimizer comes up with a plan. The second time it reuses the plan, which is a good thing because it saves time. RECOMPILE asks for a new plan each time. If the cached plan was built for a parameter value that returns a small number of rows and the next call passes a parameter that returns a large number of rows, the previous plan will work badly. Analyze the parameter value range and decide if you want to use recompile. Every single statement in the SP gets a plan, and not all of them need a recompile. sp_recompile and WITH RECOMPILE recompile the entire SP. Nested loops work great when one of the joined tables has a small number of rows.

- Not making predicates SARGable (Search ARGument-able).

This helps the optimizer select indexes.

Where number < @i + 1 (sargable)

Where number - 1 < @i (not sargable: 32K pages read vs 3 pages)

Session 2: Columnstore Clustered vs Nonclustered, When to Choose What

Speaker: Niko Neugebauer

Index Types: A clustered index is the physical structure, and all columns are stored sorted by it. There can be up to 999 nonclustered (NC) indexes per table.

Columnstore indexes: Rowstore is horizontal (clustered + nonclustered), with page and row compression available. Columnstore (CS) compresses data for each column and hence can get more compression. Only one columnstore index is allowed per table, either clustered or nonclustered.

OLTP architecture: with an insert, update and delete load, no CS index should be created because it will not benefit.

Operational analytics: needs 1 columnstore index, a nonclustered one (NCCI).

DW: – 1 Clustered Columnstore Index (CCI)

BI: – hybrid, you have to think and do trial and error.

SQL 2012 had NCCI for DW. You could not update them unless you were switching partitions. No OLTP support.

In SQL 2014, we got updatable CCI. Batch execution mode became available. But it was still for DW only. You cannot use any rowstore indexes with a CCI, only with an NCCI.

In SQL 2016, NCCI becomes updatable. There are more restrictions for DW: CDC and CT are supported only with NCCI. NCCI can be filtered. Support for replication and indexed views (not documented) was added. OLTP got NCCI, and so did in-memory (Hekaton), but not CCI. Natively compiled SPs do not support CCI.

In SQL 2017, LOB data type support is finally included in CCI. Computed columns are supported.

SQL Server 2019 includes an online rebuild of CCI. Expect a 2 to 3 times difference in execution time because the optimizer will use the delta store. If it takes 1 hour to rebuild offline, it will take 3 or 4 hours online.

Session 4: Lightning Talks: DBA

This Lightning Talk session will be comprised of five 10-minute talks on SQL Server, DevOps, TempDB Performance, Troubleshooting and more! Sessions and Speakers will include:

- “SQL Server Performance Troubleshooting: Bottom to Top… or Top to Bottom?” Andy Yun

- “How High Is Your Availability?” Allan Hirt

- “The Top Five Things DBAs Need to Know About DevOps” Hamish Watson

- “Evil Indexes Reducing Performance of SELECT” Pinal Dave

- “SQL Server’s Most Wanted: Five Common Vulnerabilities” Ed Leighton-Dick

Allan Hirt:

FedEx example: a virus cost them 300 million dollars. Hurricanes: Mother Nature outages. He told 3 short stories to make his point.

It’s about the requirements. He asks the business what they are trying to achieve. He wants to make sure it is clear to them why they want something.

Technology can provide business continuity but should not drive it. How much risk is the business ready to take? The answer should be on paper in terms of RPO and RTO.

Keep systems simple; recovery will be faster. Do not implement anything you are not ready to monitor and support. Automation breaks down, so have a plan B.

His main point was: define availability requirements first.

Andy Yun:

What is a proper performance method? Driving to work analogy.

He used a lot of emotional pictures of his drive and made the connection: nobody likes to be stuck in traffic, and similarly we don’t like to be stuck behind slow queries. What is the root cause of the congestion? He used sp_WhoIsActive, which is one way to look at the queries. Then look at it differently, like looking at the traffic from a helicopter to see what is causing it. Take a top-down approach. Look at what is going on at the server level, using Resource Monitor or Performance Monitor, or tools like SentryOne. That tells you the high-level wait types; then look closer at a database. Narrow it down to wait types and queries.

Hamish Watson:

We don’t trust, and that’s why we do things manually. We do not use source control consistently. Application changes can break our database.

In SSMS it is hard to use source control, but VS supports it. tSQLt is a free unit testing tool. SSDT allows you to incorporate T-SQL unit testing.

Continuous Integration (CI): each check-in is verified by an automated process. This allows for continuous delivery (CD).

Culture is very important, because this process needs partnership, not silos.

Pinal Dave:

He showed an example where a SELECT query improves when many indexes are created, but deletes, inserts and updates slow down. He says that is not all: a SELECT query can also run slower, because an index that the optimizer does not choose for some reason can still adversely affect execution compared to having no index at all.

Ed Leighton-Dick:

Patching, logging and auditing all actions are good and needed. The best defense against SQL injection is to use safeguarded stored procedures.
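A large part of what makes a stored procedure "safeguarded" is that user input arrives as a parameter and is never spliced into the SQL text. The sketch below shows that idea with Python's sqlite3 module, using a parameterized query as a stand-in for a stored procedure call. The table, function names and attack string are invented for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, secret TEXT)")
conn.executemany(
    "INSERT INTO users (name, secret) VALUES (?, ?)",
    [("alice", "a1"), ("bob", "b2")],
)

# Classic injection attempt: closes the quote and adds an always-true condition.
malicious_input = "nobody' OR '1'='1"


def lookup_unsafe(name: str) -> list:
    """Builds the SQL by string concatenation: the input becomes part of the query."""
    sql = "SELECT name FROM users WHERE name = '" + name + "'"
    return conn.execute(sql).fetchall()


def lookup_safe(name: str) -> list:
    """Passes the input as a bound parameter: it is only ever treated as a value."""
    return conn.execute("SELECT name FROM users WHERE name = ?", (name,)).fetchall()


print("concatenated SQL returned", len(lookup_unsafe(malicious_input)), "rows")
print("parameterized SQL returned", len(lookup_safe(malicious_input)), "rows")
```

A stored procedure that builds dynamic SQL by concatenating its parameters has the same weakness as `lookup_unsafe`, which is presumably why the advice specifies safeguarded procedures.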

I hope you find these notes useful. I apologize for the many grammatical and shorthand mistakes in my notes.